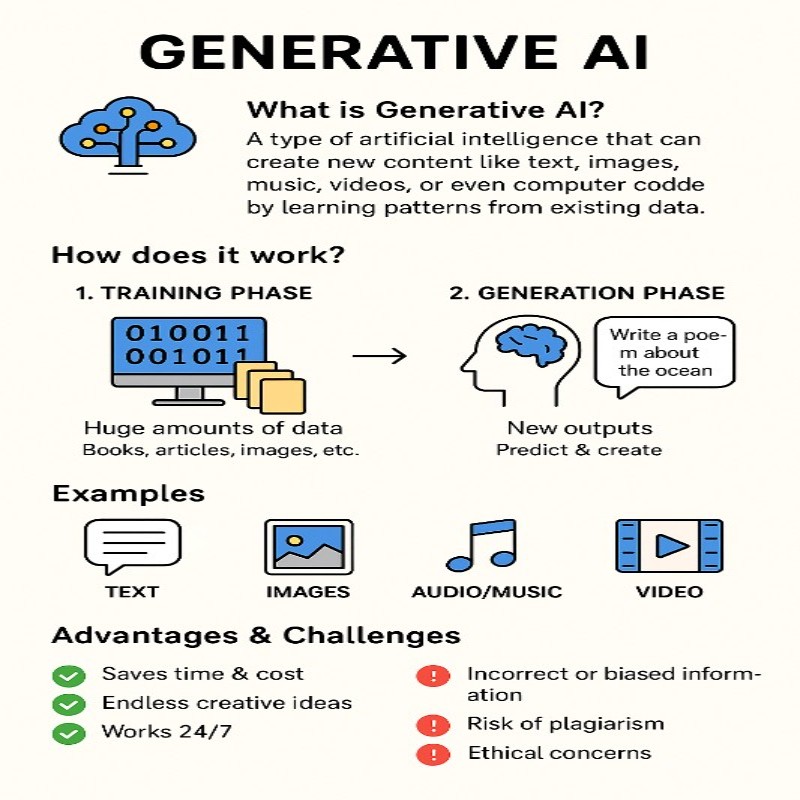

What is Generative AI?

Generative AI is a branch of artificial intelligence that can produce entirely new and original content (such as text, images, audio, video, or even 3D designs), often so realistic that it is difficult to distinguish from human-created work.

Unlike traditional AI, which mainly analyses data, generative AI learns patterns from existing data and then generates new data that resembles it.

Core Technology behind Generative AI

Generative AI relies on deep learning, a specialized area of machine learning (ML) that uses neural networks with multiple layers to model complex patterns. While machine learning is broad, deep learning is particularly effective for generative tasks, such as creating realistic images, producing human-like text, or composing music.
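To make "multiple layers" a little more concrete, here is a minimal sketch of a two-layer network's forward pass in plain Python. The helper names (`dense`, `relu`, `forward`) and the weights are illustrative assumptions: the weights are hand-picked rather than learned, so this shows only how layers stack, not how training works.

```python
def relu(values):
    # Non-linearity applied between layers: negative values become zero.
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    # One fully connected layer: each output is a weighted sum of the inputs plus a bias.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(inputs, layers):
    # Apply each layer in turn, with ReLU after every layer except the last.
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations

# Hypothetical hand-picked weights: 2 inputs -> 2 hidden units -> 1 output.
layers = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]

print(forward([3.0, 1.0], layers))  # [2.0]
print(forward([1.0, 3.0], layers))  # [2.0]
```

With these weights the network outputs the absolute difference of its two inputs, something a single linear layer cannot represent. Real generative models use the same stacking idea with far more layers and with weights learned from data.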

A central component of generative AI is the Large Language Model (LLM). LLMs are self-supervised machine learning models trained on massive amounts of text data for natural language processing (NLP) tasks. They can understand, process, and generate human-like text. One of the first large language models was GPT, which stands for Generative Pre-trained Transformer.
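"Self-supervised" means the training labels come from the text itself: the model sees some words and must predict the next one. The sketch below shows how such (context, next word) pairs could be built. The function name `next_token_pairs` and the whitespace tokenization are simplifying assumptions; real LLMs use subword tokenizers and much longer contexts.

```python
def next_token_pairs(text, context_size=3):
    # Split on whitespace (an assumption; production tokenizers use subword units).
    tokens = text.split()
    pairs = []
    for i in range(context_size, len(tokens)):
        context = tuple(tokens[i - context_size:i])  # what the model sees
        target = tokens[i]                           # what it must predict
        pairs.append((context, target))
    return pairs

sentence = "generative models learn patterns from data"
for context, target in next_token_pairs(sentence):
    print(context, "->", target)
# ('generative', 'models', 'learn') -> patterns
# ('models', 'learn', 'patterns') -> from
# ('learn', 'patterns', 'from') -> data
```

No human labeling is needed here, which is why this style of training can scale to very large text collections.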

Popular Generative AI Tools

- Chatbots: ChatGPT, Copilot, Gemini, Claude, Grok, DeepSeek
- Text-to-Image Models: Stable Diffusion, Midjourney, DALL·E
- Text-to-Video Models: Veo, Sora

Leading Companies in Generative AI: OpenAI, Anthropic, xAI, Microsoft, Google

Applications of Generative AI

- Content Creation
  - Text: Articles, stories, poems, or computer code
  - Images: Realistic artwork or edits from text prompts
  - Audio: Music, sound effects, or speech synthesis
  - Video: Short clips generated or edited from descriptions
  - Code: Auto-generating software programs based on user prompts
- Other Uses: 3D models, synthetic datasets for research, data augmentation
- Healthcare: Generating synthetic medical images for study
- Education & Research: Creating additional datasets for AI training

Challenges of Generative AI

- Deepfakes: Fake videos and images that can mislead viewers
- Bias: Reproduction of bias present in training datasets
- Authenticity: Difficulty in differentiating between real and generated content
- Ethical Issues: Ownership, copyright, and intellectual property concerns
- Job Impact: Potential large-scale replacement of human roles
- Cybersecurity Risks: Misuse for cybercrime

How Generative AI Works

- Trains on large datasets (text, images, audio, video, etc.)
- Learns underlying patterns, structures, and styles from the data
- Uses this knowledge to generate new, consistent, and realistic outputs when prompted
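The three steps above can be sketched with a tiny word-level Markov chain, one of the oldest approaches to probabilistic text generation. This is a toy illustration and not how modern deep learning models work internally; the function names `train` and `generate`, the sample corpus, and the fixed seed are all assumptions made for the example.

```python
import random
from collections import defaultdict

def train(text):
    # Steps 1 and 2: read the data and record which word follows which.
    words = text.split()
    transitions = defaultdict(list)
    for current, following in zip(words, words[1:]):
        transitions[current].append(following)
    return transitions

def generate(transitions, start, length, seed=0):
    # Step 3: starting from a prompt word, repeatedly sample a plausible next word.
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    word = start
    output = [word]
    for _ in range(length - 1):
        candidates = transitions.get(word)
        if not candidates:
            break  # this word never had a successor in the training text
        word = rng.choice(candidates)
        output.append(word)
    return " ".join(output)

# Hypothetical miniature "dataset".
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train(corpus)
print(generate(model, start="the", length=8))
```

Every generated word pair already appeared somewhere in the corpus, yet the full sentence may be new. Modern generative models follow the same train, learn, generate loop, but learn far richer patterns than word-to-word transitions.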

Historical Development of Generative AI

- 1906 – Markov Chains introduced by Andrey Markov, later used for probabilistic text generation
- 1970s – AARON by Harold Cohen, a program that generated paintings
- 1980s–1990s – Generative AI Planning, symbolic AI for action sequencing
- 2000s – Growth of Deep Learning Models
- 2014 – Development of Variational Autoencoders (VAE) and Generative Adversarial Networks (GAN)
- 2017 – Introduction of the Transformer Network, enabling more advanced models
- 2018–2019 – Launch of GPT and GPT-2, the first large-scale LLMs

VAE, GAN, and Transformers are considered the core architectures of modern generative AI.

Key Milestones in Generative AI Tools

- 2021 – OpenAI released DALL·E for image generation
- 2022 – Midjourney launched, specializing in text-to-image graphics
- Nov 2022 – ChatGPT by OpenAI marked a turning point in AI adoption
- Mar 2023 – Release of GPT-4 by OpenAI
- 2023 – Meta introduced ImageBind (multi-modal AI); Google released Gemini (Ultra, Pro, Nano)
- Mar 2024 – Anthropic launched Claude 3
- Aug 2025 – OpenAI unveiled GPT-5

Author

Anjali Pawar (Developer)